Profiling in C/C++

Where to work on this task

-

Your own machine with Nix: The most comfortable way. Use

nix-shellto get the environment with all the dependencies needed (perf, hotspot, libopencv, libboost). -

Your own machine with Linux OS: You need to install (only a few) required tools from the repository (perf, hotspot, libopencv, libboost). Mac users may use instruments or Clien for profiling instead of perf and hotspot.

-

Remote access to our server: You may

ssh -X <username>@ritchie.ciirc.cvut.czto connect remotely to the Ritchie server, where Nix is installed. Then, work as you would work on your own machine with Nix. -

Computers in the laboratory: Nix is installed on the computers in the laboratory. Use

nix-shellto get the environment with all the dependencies needed (perf, hotspot, libopencv, libboost).

We will show how to use the perf profiler on tinyrenderer programm.

Task assignment

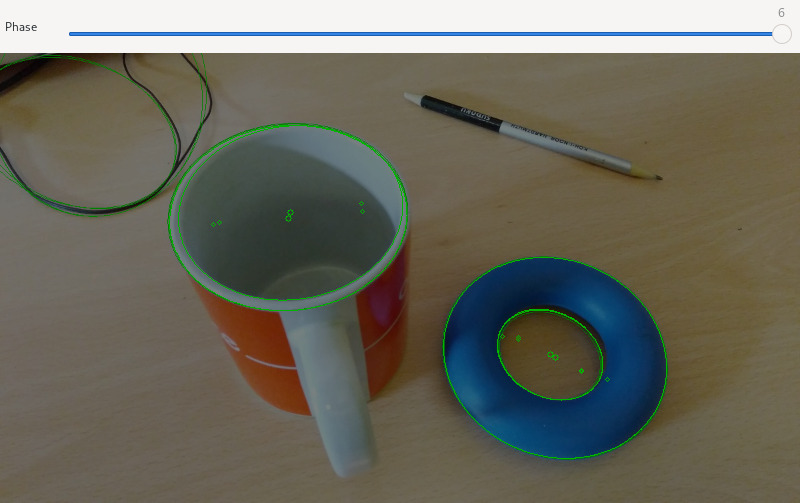

Imagine that you are a developer in a company which develops autonomous driving assistance systems. You are given an algorithm which doesn't run as fast as your manager wants. The algorithm finds ellipses in given picture and will be used for wheels detection of a neighbouring car while parking. Your task is to speed up this algorithm in order to run smoothly.

You probably need do following steps to achieve the desired speed-up:

-

Download the project from git repository

git clone https://gitlab.fel.cvut.cz/esw/ellipses.git -

Follow the build instructions in the README file.

-

Run the program. Either use it with your camera (use an integer as a command line argument) or use it with example images stored in the repository.

-

Profile the program (hints below). To achieve reproducible results, use images from the repository. Run the program with at least 1000 iterations of the algorithm and disable GUI (pass

no-guiargument). See the output offind_ellipse -hfor more information.The goal of the profiling is to find slow parts of the program.

perfand its graphical frontend Hotspot may help. -

Make changes that will improve execution speed. In particular, use reasonable compiler flags (the defaults are

-Og -g) and improve performance at the places discovered by profiling.Note

You are not allowed to modify the number of iterations and other parameters of the RANSAC algorithm!

-

Upload a patch file into the upload system and wait whether you pass the specified execution time limit. The patch will be applied using the

git applycommand, therefore, the best way to generate the patch is thegit diffcommand:git diff origin/master > ellipse.diff.txt(The

.txtextension is required by the upload system.)The elapsed time limit is set to be not worse than the reference solution plus 100 ms.

-

Automatic evaluation should finish with green message and assignment of the points. In such a case, your solution passed and will be checked manually. Otherwise, the automatic evaluation finishes with red message describing why the solution failed. In any other case, please, raise new issue in GitLab.

When compiling the project, use meson compile --verbose -C build to

see the compiler options used. Investigate those arguments.

To experiment with the compiler flags, you may create a new build directory:

meson setup improved-build --buildtype=plain

meson compile --verbose -C improved-build/

and set compiler options as you wish:

meson configure improved-build -Dcpp_args='-O0 -g'

meson compile --verbose -C improved-build/

When submitting the solution (as ellipse.diff.txt), include the

changes of meson.build in your patch.

Note

You may also need to add linker options like -Dcpp_link_args='-pg'.

Basic profiling techniques

How can we evaluate the efficiency of our implementation? Run time gives a simple overview of the program. However, much more useful are different types of information such as the number of performed instructions, cache misses, or memory references in respective lines of code in order to find hot spots of our program.

Measuring execution time

The easiest way to analyze program performance is to measure its execution time. There are multiple ways how it can be done in a C/C++ program:

-

the C time library, specifically the clock function. Note that in some systems (other than Linux), the clock resolution may not be as good as the options below.

-

More precision values can be obtained by using

high_resolution_clockin chrono C++11 library. -

On Linux, you can use directly the

clock_gettimefunction, which is used by the above options (on Linux). Note that this function uses virtual syscall mechanism, which makes it very fast.

GProf

GProf is a GNU profiling tool based on statistical sampling (every 1 ms or 10 ms). It collects the time spent in each function and constructs a call graph. The program must be compiled with a specific option, which adds profiling code to your program. All libraries you want to profile must be statically linked. When you run the program, profiling information is generated. Shared libraries can be profiled with sprof.

To use GProf:

- Set proper compiler and linker arguments to build the project with profiling code.

- Run the program. It will create

gmon.outif you did (1) well. - Examine the output. It is better to first redirect the output to a

file with

gprof ... > somefile, and see thesomefileafterwards. - To see the callgraph, use

gprof --graph ... > somefile.

Valgrind

Valgrind is an analysis tool for debugging and profiling. It works by emulating the CPU in software and executing your program with the emulated CPU. Therefore, your program run many times slower and the results do not need to correspond to the results when running on bare metal.

Cachegrind simulates behavior of the program under test in the relevance to the cache memory.

Callgrind simulates the program under test, recording the calls to the program's functions.

Outputs from these tools are the best viewed in the KCachegrind tool.

To use Cachegrind:

- Run the program under valgrind with

valgrind --tool=cachegrind ....

To use Callgrind:

- Run the program under valgrind with

valgrind --tool=callgrind .... - Examine the output with

kcachegrind.

Profiling using perf

Most modern processors have hardware performance counters that can count various hardware events (clock cycles, instructions executed, cache reads/hits/misses, etc.). Linux perf is a tool that can analyze programs by using these counters. This means, that you don’t have to modify the program to profile it.

The list of events that can be profiled with perf can be listed by:

perf list

You can see, that besides the hardware events, perf also offers software events (e.g. context switches or page faults) that are provided by the Linux kernel. Here, we'll focus on hardware events.

You can also use any hardware events listed in the appropriate reference manual for your CPU. For Intel processors it’s Intel® 64 and IA-32 Architectures Software Developer Manuals, specifically Volume 3B (Chapter 20) or the perfmon-events website.

Profiling with perf typically involves two steps:

- Recording profiling data with

perf record, and - displaying the results with

perf report,perf annotateor similar.

For a simple overview you can also use perf stat or perf top.

To profile a single program use e.g.:

perf record -e cycles ./program

This records profiling data into perf.data file and perf report

shows you a simple report of how much time was spent in each function.

You can also annotate each function (by pressing Enter) and see where

in in the function was spent most of the time.

In many cases, it's more useful, if we also know from where was each

function called. This can be achieved by storing not only the values

of performance counters together with the instruction pointer in the

profiling data, but also storing the full call graph – a list of call

site addresses. The result is much bigger amount of data to store and

therefore higher profiling overhead. Storing the call graph can be

enabled by the -g argument. This alone is typically sufficient,

because there are multiple ways how the call graph can be obtained and

the default (fp) often doesn't work:

-

--call-graph=fp(frame pointer) uses so called frame pointer, stored in every function frame on the stack, to obtain the call graph. This only works if the program is compiled with frame pointers. Typically, one has to add-fno-omit-frame-pointerto the compiler arguments. -

--call-graph=dwarfconstructs call graphs from debugging information. Requires compiling with-g. -

--call-graph=lbruses hardware Last Branch Record (LBR) feature of modern Intel CPUs. The CPU stores the call graph automatically in hardware andperfcan read it. This does not require any compiler options, but can be imprecise if the depth of LBR buffer is not bug enough to store the full call graph.

A few examples of perf usage: brendangregg/perf

Note

By default, using performance counters is not allowed without root privileges, so you cannot run perf on the computers in the lab.

When working on your own computer, you can enable access for non-root users by:

echo 1 | sudo tee /proc/sys/kernel/perf_event_paranoid

On the Ritchie server the value is set as the following:

$ cat /proc/sys/kernel/perf_event_paranoid

0

Search for the perf_event_paranoid in the kernel documentation for

more information.

An advantage of using perf is that it can profile not only a single

program, but a whole system, i.e., multiple programs including the

Linux kernel. This is useful because often, the performance problems

happen inside the kernel (e.g. due to its incorrect use by

applications). This mode can be enabled with the -a switch, e.g.:

perf record -a --call-graph=lbr

Note that if you want to see the kernel addresses without being root, you need to allow non-root users access information about kernel addresses, which disabled by default for security reasons:

echo 0 | sudo tee /proc/sys/kernel/kptr_restrict

Hotspot

The perf tool has a GUI called Hotspot that makes it easier to run the recording and analyze recorded data.

To use Hotspot:

- Run

hotspot(and Record Data). - Specify Application, Parameters, and Working Directory (

./). - Start Recording.

- View Results.

Handling performance counters directly from C/C++ program

If you are interested in performance counters, you can use it directly

from your C/C++ program (without any external profiling tool). See

perf_even_open manual page, or use some helper library built on kernel

API (libpfm, PAPI toolkit, etc.)

- http://man7.org/linux/man-pages/man2/perf_event_open.2.html

- http://perfmon2.sourceforge.net/

- http://icl.cs.utk.edu/papi/index.html

You can also access the performance counters (the hardware) directly

from your application (if supported by your HW and allowed by the OS).

However, this has the disadvantage that it's not clear who (program,

thread or OS kernel) caused the event to happen. The advantage of

using perf is that by being implemented in the Linux kernel, it

knows who currently runs on each CPU and "context switch" the event

counters to count events "per-thread".

Windows alternatives

We have no experience with Windows tools, however, there are a few free tools, for example, list on this page:

MS Visual Studio has profiler:

Another windows profilers

Also, Windows alternative to KCacheGrind – QCacheGrind:

If you do not want install any of these tools, you can work remotely on our server via ssh. Putty and Xming are your friends.

How to optimize execution time?

There are many ways how to optimize your programs, you can

-

play with Options That Control Optimization or generate code adjusted for your processor, e.g. with x86 Options,

-

avoid dynamic memory allocations in computation (e.g. sometimes, you can reuse existing arrays),

-

try to precompute values, replace exact values by approximations, simplify equations if possible,

-

inline functions,

-

parallelize (not in this case),

-

…

Several tips for optimizations (the referenced paper is quite old – some of the “tips” are already done by today's compilers, e.g., 14, 18).